A few weeks ago, during a spell of unusually dry winter weather, I went to unplug a pair of Grado SR-80 headphones from my iMac. A spark of static electricity leapt from my fingers, I heard a brief crackling sound, and then… [silence]. From that moment forward, the headphone/speaker jack on the back of the Mac has refused to work, and only “Internal Speakers” shows up in the System Preferences Sound panel. My trusty work Mac had gone mute.

My only options were either to send the Mac in for repair or to switch to USB audio output. I couldn’t afford to be without the Mac, and I was interested in hearing what kind of audio upgrade I’d get by bypassing the Mac’s internal digital-to-analog converter (DAC), so I hit up an audiophile friend for recommendations. I hit the jackpot when he suggested the NuForce μDAC (aka microDAC) – a handsome $99 outboard DAC smaller than a pack of smokes.

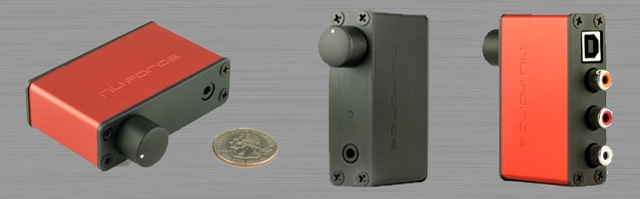

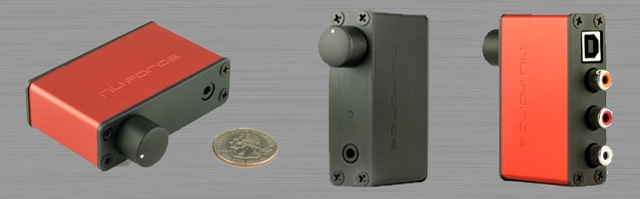

The unit arrived a few days later, and turned out to be even smaller than expected (around 3″x1″). The two-tone rust and flat-black anodized aluminum casing looked distinguished and well crafted; NuForce really put some effort into the aesthetics on this one. The design is simple, with no unnecessary controls: just a volume knob and a headphone output jack, nothing more.

I was blown away from the moment I plugged it in and enabled it in the Sound prefs Output panel. Digital audio has never sounded better on a computer I’ve owned. But since the original analog jack was fried, I had no way to directly compare the quality of the Mac’s native DAC with the new outboard. Today I sat down at someone else’s work Mac and did some A/B testing.

For the test, I chose two recordings:

- Sonny Rollins: “I’m an Old Cowhand” (from Way Out West)

- Beatles: “Because” (from Abbey Road 2009 Stereo Remaster)

(I chose these two because A) I love them and B) I had them on hand at 256kbps AAC, for best possible resolution).

Note: I appreciate great-sounding audio, but I’m far from a hardcore audiophile. For a balls-out audio tweak’s perspective on the μDAC, see HeadphoneAddict’s review at head-fi.org.

Just a few minutes into Cowhand, I noticed something I’d never heard before: The sound of the cork linings of the valves of Rollins’ saxophone tapping away as he played. It was subtle, but it had been there in the recording all along – I had just never noticed it. And that’s exactly the point – the differences are subtle, and you may not notice all of them unless you’re listening for them, but they’re present. And that subtlety adds up to an overall experience that’s simply more realistic, more nuanced than what you get with the cheaper DAC built into consumer PCs. It’s all about presence.

Likewise, I found the harmonies in Because fuller, richer, more bodied than they sounded through the Mac’s native DAC. The French horns were far more alive and breathy, the harpsichord more twangy. Virtually everything about these two tracks sounded more engaging.

Another thing I noticed: Usually, near the end of a long day writing code, I feel the need to take the headphones off and rest my ears. I didn’t have that sensation today. I can’t say for sure, but I suspect that more natural sound is less fatiguing to the ears (and the brain’s processor).

One caveat: Because there’s no longer an analog sound channel for the computer to manipulate, you’ll lose the ability to control volume or to mute from the Mac’s keyboard. Apparently this is not true of all DACs – the driver for M-Audio boxes does allow volume and mute control from the Mac keyboard, so the issue must rest in the generic Mac USB audio driver (the NuForce unit doesn’t come with an installable driver – it’s plug-and-play). In any case, the keyboard habit has been ingrained for so many years that I don’t even think about it, so retraining myself to adjust audio from the μDAC’s volume knob took some getting used to. However, you can still use the volume control in iTunes itself, and it may be possible to re-map the keyboard’s audio control keys to tweak iTunes’ internal volume directly.
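I haven’t wired this up to the keyboard myself, but here is a minimal sketch of the iTunes half of that idea. It assumes you drive iTunes through AppleScript’s `sound volume` property (a 0 to 100 scale) using the `osascript` command-line tool that ships with the Mac. The function names are made up for illustration, and binding the script to the actual volume keys would need a separate hotkey utility, which isn’t shown here.

```python
import subprocess


def clamp_volume(level: int) -> int:
    """Keep the requested level inside iTunes' 0-100 volume range."""
    return max(0, min(100, int(level)))


def build_volume_script(level: int) -> str:
    """Return a one-line AppleScript that sets iTunes' own volume.

    This changes the player's internal volume only; it does not touch
    the system output level or the DAC's hardware knob.
    """
    return f'tell application "iTunes" to set sound volume to {clamp_volume(level)}'


def set_itunes_volume(level: int) -> None:
    """Run the AppleScript through osascript (macOS only)."""
    subprocess.run(["osascript", "-e", build_volume_script(level)], check=True)
```

Calling `set_itunes_volume(40)` on a Mac should set the iTunes volume slider to 40 percent, leaving the μDAC’s knob as the master control.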

It’s no secret that you can get better sound quality out of almost any computer by routing around the built-in audio chipset. There’s just no way Apple (or Dell, or anyone else) is going to spend more than a few dollars on high-end audio circuitry when most people are perfectly happy with 128kbps MP3s played through cheap-o speakers, and every penny counts in manufacturing bottom lines. But using an outboard DAC for signal conversion can be an expensive proposition, not to mention involving bulky, inelegant, desk-cluttering plastic boxes. The NuForce μDAC gives you high-end computer audio that’s both affordable and elegant.

Another benefit: If you’ve been considering using a dedicated digital audio file player like an AudioRequest connected to the home stereo, you’ll end up having to migrate and store another copy of your audio library, not to mention add more cabling and componentry to your entertainment center. With something like the NuForce μDAC, you can leave everything on your main computer and just route high-fidelity audio to the stereo.

In any case, the NuForce μDAC is one of the best c-notes I’ve dropped on audio gear over the years. Recommended even if you haven’t fried your analog port.

Update: This article has been republished at Unclutter.com.
